Introduction

Stephen Hawking once said, “The development of full AI could end the human race.” Elon Musk has likewise called AI the human race’s “biggest existential threat,” and many other prominent figures share that view. Is AI really that dangerous, or is this far-fetched speculation? AI has already been integrated into everyday life, often without our realizing it. When we use Siri on an iPhone or Waze to get directions, do we know what mechanisms and tools were used to build these intelligent systems? Building trust is crucial for adopting AI, so at what stage should we begin to ask whether we trust it? Let’s dig deeper and look for an answer.

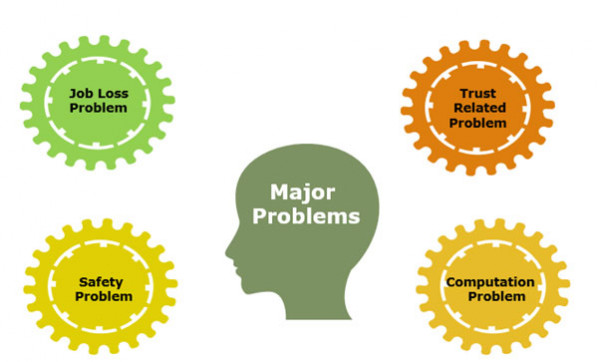

Problems of AI & possible solutions

Job loss problems

Job losses caused by AI have been a major topic of discussion in companies and academic studies. According to an Oxford study, 47% or more of US jobs will be under serious threat from AI deployment by 2035. The World Economic Forum projected that AI automation could replace more than 75 million jobs by 2022. Lower-income and lower-skilled employees will be the worst affected, but as AI becomes smarter, high-salaried and high-skilled employees will also become more prone to job losses. AI can do the same job at a significantly lower cost and does not ask for a salary, so the threat of job loss is quite real.

Possible solutions

- Making significant changes to the education system and placing more emphasis on skills such as critical thinking, innovation, and creativity, which are hard for AI to replicate.

- Increasing public and private investment in human capital so that workers’ skills are better aligned with industry demand.

- Improving labor market conditions by closing the gap between demand and supply and giving more importance to the gig economy.

Safety problems

Safety problems related to AI have long drawn public concern. When prominent figures such as Bill Gates, Elon Musk, and Stephen Hawking express concern over AI safety, we should pay attention. Unfortunately, there have been many incidents where AI has produced harmful or unexpected outcomes. For example, a Twitter chatbot started spreading abusive and pro-Nazi sentiments. In another instance, Facebook AI bots began interacting with each other in a language that no one could understand, which ultimately led to the project being terminated.

There are grave concerns about AI harming humanity. For example, autonomous weapons can be programmed to kill human beings. There is also the threat that AI could develop a “mind of its own” and stop following the instructions humans give it. If such weapons become widespread, it will be very challenging to undo their consequences.

Possible solutions

- We have to impose strong regulations, especially on the development and field testing of autonomous weapons.

- Global cooperation and shared policies on such weapons are required so that no country gets greedy in the race to develop the best autonomous weapons.

- Full transparency in the systems where AI technologies are adopted or tested is crucial to ensure safe usage.

Trust-related problems

As AI algorithms become stronger by the day, they raise numerous trust-related questions about their ability to make fair decisions that improve human life. The trust issue becomes more significant as AI slowly approaches the cognitive abilities of the human brain.

There are numerous applications where AI works like a black box. For example, in high-frequency trading, programmers have a poor understanding of the basis on which the AI will execute a trade. More troubling examples include Amazon’s AI-based algorithm for same-day delivery, which appeared to be biased against Black neighborhoods. Another example is COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), where the AI algorithm, while profiling suspects, appeared to be biased against the Black community.

Possible solutions

- All the top AI service providers need to set up guidelines and principles concerning trust and transparency in AI deployment. These rules must be meticulously followed by each stakeholder involved in AI development and usage.

- Owners of AI companies must be aware of the bias that AI algorithms can inherently introduce and should have strong bias detection technology and methods to manage it.

- Creating awareness also plays a significant role in reducing trust issues. Users should clearly understand how an AI system operates, what it can do, and what its shortcomings are.
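To make the idea of bias detection more concrete, here is a minimal Python sketch of one possible check: comparing how often each group receives a favorable decision. The function names, the sample data, and the 0.8 cutoff are assumptions chosen for illustration. The cutoff echoes the commonly cited “four-fifths” rule of thumb, and it is not a standard that any of the systems mentioned above is known to use.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable decisions for each group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the model gave the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so there is no gap
    return min(rates.values()) / highest


# Hypothetical decisions: (group label, was the request approved?)
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed review threshold, not a universal standard
print(rates, round(ratio, 3), flagged)
```

A low ratio does not prove that a model is unfair, but it is a cheap signal that a human should take a closer look at how the decisions are being made.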

Computation problems

AI algorithms analyze enormous amounts of data, which requires great computational power. So far, the issue has been handled with the help of parallel processing and cloud computing. However, as more data is added, deep learning algorithms become more complicated. As AI moves into the mainstream, current computational power may not be enough to meet these complex requirements. We might need more storage space and computational capacity to crunch exabytes and zettabytes of data.
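A quick back-of-the-envelope calculation shows why parallel processing matters at this scale. The sketch below assumes a read speed of 1 GB/s per worker and perfect parallel scaling. Both are illustrative assumptions, not benchmarks, and real systems will fall short of them.

```python
EXABYTE = 10**18  # bytes (decimal definition)
SECONDS_PER_DAY = 86_400


def scan_days(total_bytes, bytes_per_second_per_worker, workers):
    """Days needed to read `total_bytes` once, assuming perfect parallel scaling."""
    seconds = total_bytes / (bytes_per_second_per_worker * workers)
    return seconds / SECONDS_PER_DAY


# Assumed throughput: 1 GB/s per worker (an illustrative figure, not a benchmark).
one_worker = scan_days(EXABYTE, 10**9, workers=1)
ten_thousand_workers = scan_days(EXABYTE, 10**9, workers=10_000)

print(f"1 worker: {one_worker:,.0f} days")
print(f"10,000 workers: {ten_thousand_workers:.2f} days")
```

Under these assumptions, a single worker would need roughly 32 years just to read one exabyte once, while 10,000 workers would need a little over a day. Training a model involves far more than one pass over the data, which is why computational capacity becomes a bottleneck.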

Possible solutions

- Quantum computing, which is based on quantum theory, might be a viable way to overcome computational power limits and processing speed issues in the medium to long term.

- Quantum computing has been reported to run certain specialized tasks about 100 million times faster than the computers we currently use at home or in the office. However, it is presently in the research and experimental phase. According to estimates from various experts, large-scale deployment could come in the next 10 to 15 years.

Conclusion

When it is developed efficiently and safely, AI adds tremendous value to our lives and companies. Because AI is directly or indirectly involved in our everyday lives and its usage is growing like never before, we need to make sure it is developed to be trustworthy and safe for everyone’s well-being. As time passes, trust and safety in AI are receiving more emphasis. AI providers have become much more cautious while creating their models and are following guidelines and frameworks to ensure that those models are trustworthy.

FAQs

1. What are the key features of a trusted AI?

A: The key features of a trusted AI are:

- Assurance

- Explainability

- Performance

- Legal and ethical compliance.

2. How did Capgemini manage to build a trusted AI?

A: Capgemini recognized that a lack of trust in AI was a major issue that could steer stakeholders away from investing in the business. In response, it developed an “ethical AI life cycle” framework with clear checkpoints for building trustworthy AI.

3. Who is held responsible if something goes wrong with an AI system?

A: The main people held responsible if something goes wrong with an AI system are:

- The Developer

- The Trainer

- The Operator

4. What are the main areas of concern that must be perfected for users to trust AI?

A: The main areas of concern that must be perfected for users to trust AI are:

- Performance

- Process

- Purpose

- Fairness

- Traceability.

5. How can we eliminate AI bias?

A: Technology and marketing experts recommend four ways to eliminate, or at least minimize, AI bias:

- Conduct a review of the AI training data

- Check and recheck the AI’s decision-making

- Gather direct input from the customers

- Constantly monitor to prevent AI bias.
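As an illustration of the first step, reviewing the training data, the Python sketch below summarizes how each group is represented and how often it carries a positive label. The field names (`region`, `label`) and the sample rows are hypothetical, and this is only one simple way such a review could start.

```python
from collections import Counter


def review_training_data(rows, group_key, label_key):
    """Summarize how each group is represented and labeled in the data.

    Returns {group: (share_of_rows, positive_label_rate)}.
    """
    counts = Counter(row[group_key] for row in rows)
    positives = Counter(row[group_key] for row in rows if row[label_key] == 1)
    total = sum(counts.values())
    return {
        group: (counts[group] / total, positives[group] / counts[group])
        for group in counts
    }


# Hypothetical training rows; the field names are illustrative only.
rows = (
    [{"region": "north", "label": 1}] * 6
    + [{"region": "north", "label": 0}] * 2
    + [{"region": "south", "label": 1}] * 1
    + [{"region": "south", "label": 0}] * 1
)

summary = review_training_data(rows, "region", "label")
for group, (share, positive_rate) in summary.items():
    print(f"{group}: {share:.0%} of rows, {positive_rate:.0%} labeled positive")
```

In this made-up sample, one group supplies most of the rows and has a higher positive rate. A model trained on such data may simply learn that imbalance, so a gap like this is worth investigating before training.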

6. Why is fairness crucial in AI?

A: Any decision-making system, including AI, can be biased toward certain factors and must therefore be assessed for fairness. Fairness in AI is tested by checking the system for bias against pre-established ethical principles.

7. Which were the top-performing AI companies in 2022?

A: The top-performing AI companies in 2022 were

- Amazon Web Services

- Google Cloud Platform

- IBM Cloud

- Microsoft Azure

- Alibaba Cloud.