Introduction:

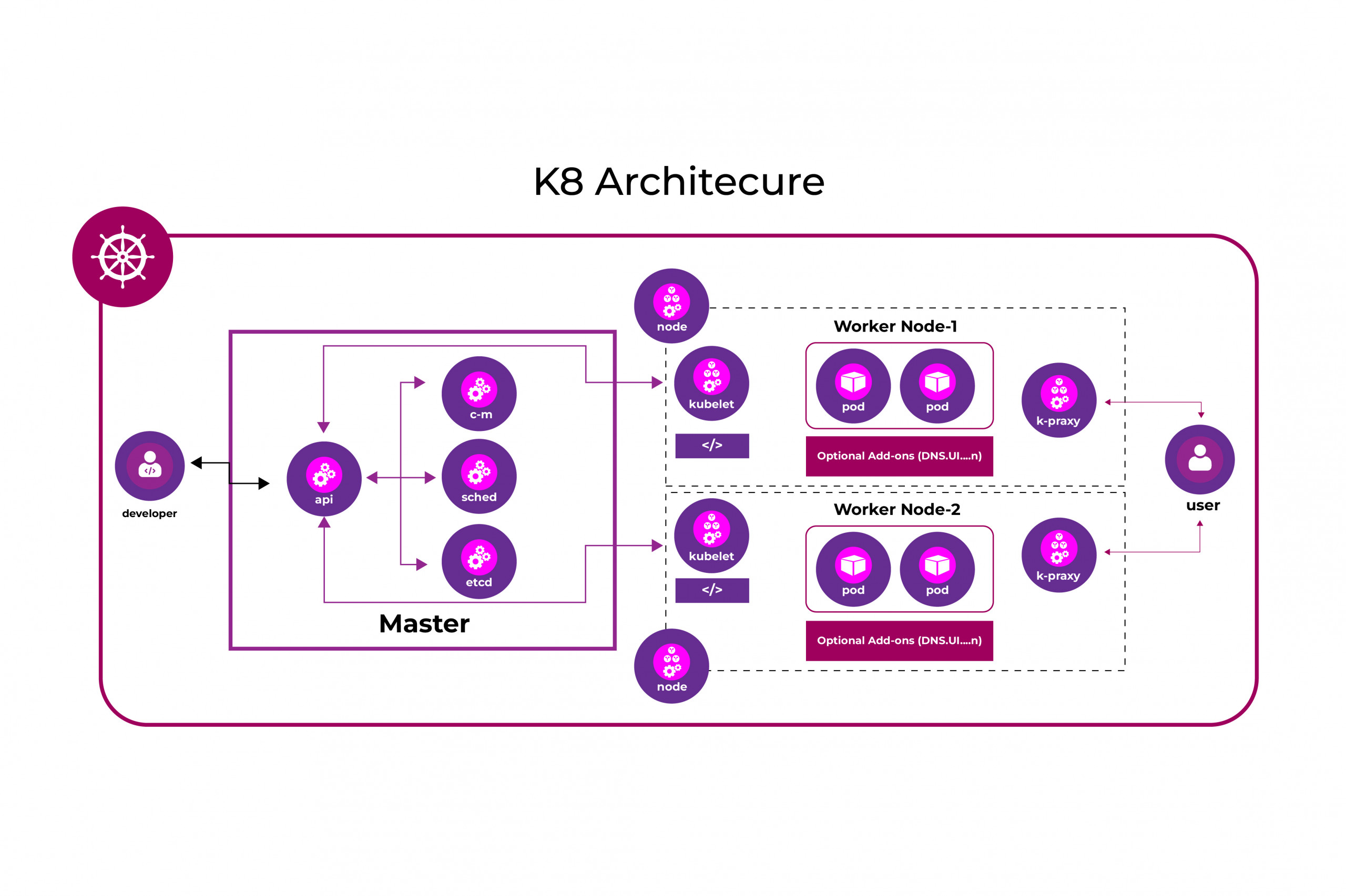

Kubernetes (often abbreviated as K8s) has become the go-to framework for orchestrating containerized applications in a cluster environment. Once an application is packaged into containers, it still needs scalability, reliability, and coordination across many containers. Kubernetes provides a powerful set of tools for automating the deployment, scaling, and management of those containers. Think of Kubernetes as a shipping port that handles all communication, scheduling, and loading of your containers onto ships (nodes) that have capacity available. With great power comes great responsibility, however: Kubernetes deployments and configurations are complex, so encountering errors when deploying applications is common. This white paper addresses 13 common errors that Kubernetes users encounter and the tools that help mitigate them. Ranging from simple misconfigurations to complex architectural issues, we explain the key concepts behind each error through a case analysis of a hypothetical deployment and offer practical guidance on how to fix it. By the end of this paper, readers will be better equipped to navigate and troubleshoot errors in Kubernetes deployments.

Key Takeaways:

- Kubernetes is a powerful container orchestration platform that can help DevOps teams deploy and manage containerized applications at scale.

- Kubernetes deployments can be complex and error-prone, requiring DevOps teams to be well-versed in Kubernetes best practices and troubleshooting techniques.

- Common errors in Kubernetes deployments can include issues with pod labels, port specifications, log analysis, pod health, resource usage, load balancing, DNS configuration, node scheduling, ingress configuration, image accessibility, and resource constraints.

- To address these errors, DevOps teams can implement solutions such as label and selector verification, port and resource specification checks, log analysis and monitoring, pod health checks, load balancer consolidation, DNS configuration verification, node scheduling configuration, ingress controller configuration checks, container registry verification, and resource availability and constraint checks.

Main Content:

In this section, we provide a case analysis of a hypothetical Kubernetes deployment and outline potential errors that can arise during the deployment process, along with corresponding solutions.

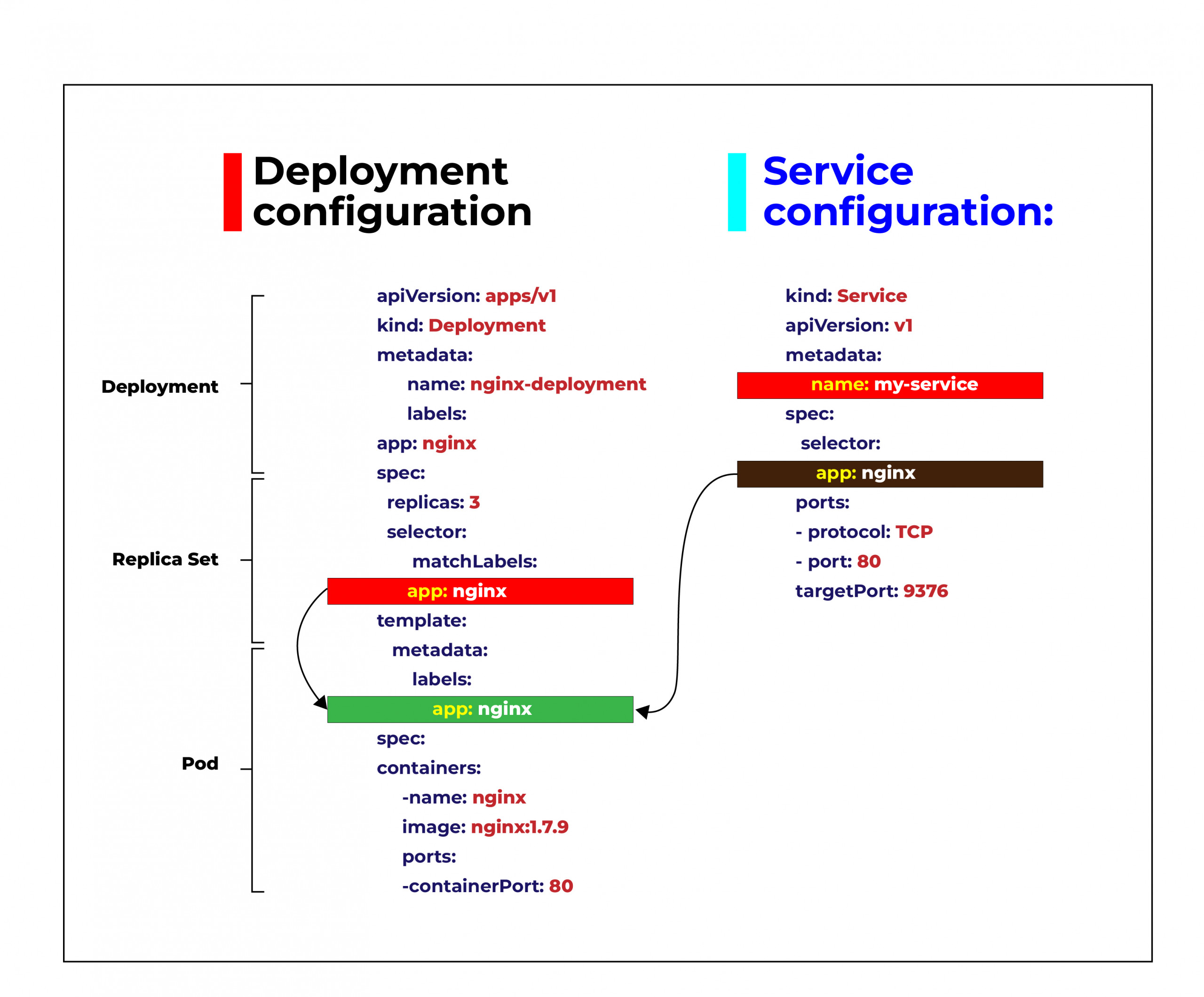

Error 1: Pod labels do not match selectors in the service manifest.

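Reason: A Service routes traffic only to pods whose labels include every key-value pair in its selector. If the labels and the selector differ, for example because of a typo, the Service has no endpoints and its traffic never reaches the pods.

Solution: Verify that the labels on the pods match the selectors in the service manifest. An empty endpoints list for the Service (kubectl get endpoints <service-name>) is a quick sign of a mismatch. For more information, see the Kubernetes documentation on service selectors.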

Labels and Selectors | Kubernetes
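A mismatch like this can be caught before anything is applied by comparing each Service selector against the pod labels. The sketch below assumes the manifests have already been parsed into Python dictionaries (for example with a YAML library) and handles only the equality-based selector that a Service uses; the service and pod names are hypothetical.

```python
# Sketch: detect Services whose selector matches none of the given pods.
# Assumes manifests are already parsed into plain dicts.

def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """A pod is selected only if it carries every key=value pair in the selector."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def unmatched_services(services: list, pods: list) -> list:
    """Return the names of Services whose selector matches none of the pods."""
    unmatched = []
    for svc in services:
        selector = svc["spec"].get("selector")
        if not selector:
            # Services without a selector have their endpoints managed manually.
            continue
        if not any(selector_matches(selector, p["metadata"].get("labels", {})) for p in pods):
            unmatched.append(svc["metadata"]["name"])
    return unmatched


# Hypothetical manifests: the Service selects app=web, but the pod is labeled app=webapp.
service = {"metadata": {"name": "web"}, "spec": {"selector": {"app": "web"}}}
pod = {"metadata": {"name": "web-7d9f", "labels": {"app": "webapp", "tier": "frontend"}}}

print(unmatched_services([service], [pod]))  # ['web']
```

Running a check like this in CI keeps selector typos from reaching the cluster; in a live cluster, the empty endpoints list reveals the same problem.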

Error 2: Incorrect port specification in the container definition and service manifest.

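Reason: The Service's targetPort must match the port the container actually listens on, which is normally declared as containerPort in the container definition. If targetPort is omitted, it defaults to the Service's own port; if it is a name, it must match a named container port. A mismatch sends traffic to a port on the pod where nothing is listening.

Solution: Ensure that the container definition and the service manifest specify consistent ports. For more information, see the Kube by Example guide on exposing applications.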

Exposing Applications for Internal Access | Kube by Example
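The port rules above can be checked mechanically. This is a sketch under the assumption that the Service spec and pod spec are plain dictionaries; the port numbers and the port name "http" are hypothetical. Note that containerPort declarations are largely informational for numeric targetPorts, so a flagged number is a strong warning sign rather than proof of failure, whereas a named targetPort must resolve to a declared port name.

```python
# Sketch: flag Service targetPorts that match no port declared by the pod's containers.

def declared_ports(pod_spec: dict) -> tuple:
    """Collect declared containerPort numbers and port names from a pod spec."""
    numbers, names = set(), set()
    for container in pod_spec.get("containers", []):
        for port in container.get("ports", []):
            numbers.add(port["containerPort"])
            if "name" in port:
                names.add(port["name"])
    return numbers, names


def mismatched_target_ports(service_spec: dict, pod_spec: dict) -> list:
    """Return targetPorts that do not correspond to a declared container port."""
    numbers, names = declared_ports(pod_spec)
    mismatched = []
    for service_port in service_spec.get("ports", []):
        # targetPort defaults to the Service's own port when it is omitted.
        target = service_port.get("targetPort", service_port["port"])
        # A string targetPort refers to a named container port.
        known = names if isinstance(target, str) else numbers
        if target not in known:
            mismatched.append(target)
    return mismatched


# Hypothetical manifests: the Service forwards to 8080, but the container listens on 80.
service_spec = {"ports": [{"port": 80, "targetPort": 8080}]}
pod_spec = {"containers": [{"name": "web", "ports": [{"containerPort": 80, "name": "http"}]}]}

print(mismatched_target_ports(service_spec, pod_spec))  # [8080]
```

Referring to container ports by name (targetPort: "http") is one way to keep the two manifests from drifting apart when the number changes.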

Error 3: Pods are not healthy or ready to receive traffic.

Reason: A Service sends traffic only to pods whose readiness checks pass, and the kubelet restarts containers whose liveness checks fail. Without appropriate probes, traffic can reach pods that are still starting or have stopped responding.

Solution: Configure appropriate liveness, readiness, and startup probes so that only healthy pods receive traffic. For more information, see the Kubernetes documentation on probes.

Configure Liveness, Readiness and Startup Probes | Kubernetes
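A simple review step is to list containers that define no readiness or liveness probe at all. The sketch below assumes a pod spec parsed into a dictionary; the images and the /healthz endpoint are hypothetical, and whether a given container really needs both probes remains a judgment call.

```python
# Sketch: report containers that define no readiness or liveness probe.

PROBE_TYPES = ("readinessProbe", "livenessProbe")


def missing_probes(pod_spec: dict) -> dict:
    """Map each container name to the probe types it does not define."""
    report = {}
    for container in pod_spec.get("containers", []):
        absent = [probe for probe in PROBE_TYPES if probe not in container]
        if absent:
            report[container["name"]] = absent
    return report


# Hypothetical pod spec: "web" has only an HTTP readiness probe, "sidecar" has none.
pod_spec = {
    "containers": [
        {
            "name": "web",
            "image": "example.com/web:1.0",
            "readinessProbe": {"httpGet": {"path": "/healthz", "port": 8080}, "periodSeconds": 5},
        },
        {"name": "sidecar", "image": "example.com/sidecar:1.0"},
    ]
}

print(missing_probes(pod_spec))
# {'web': ['livenessProbe'], 'sidecar': ['readinessProbe', 'livenessProbe']}
```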

Error 4: Resources requested and limits set for the pods are not appropriate for their workload.

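Reason: The scheduler places pods according to their resource requests, and the kubelet enforces limits at runtime. Requests that are too low can overcommit nodes, and limits that are too low cause CPU throttling or out-of-memory kills; requests that are too high waste capacity and can leave pods unschedulable.

Solution: Ensure that the resource requests and limits set for each container reflect its observed workload. For more information, see the Kubernetes documentation on resource management.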

Resource Management for Pods and Containers | Kubernetes
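Part of this review can be automated by parsing resource quantities and checking each container's requests against its limits (the API server rejects a request larger than its limit). The following is a sketch that supports only a common subset of the quantity format (plain numbers, milli "m", and the decimal and binary suffixes listed); the container values are hypothetical.

```python
from decimal import Decimal

# Multipliers for common Kubernetes quantity suffixes (a subset of the full format).
SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,  # binary, typical for memory
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,     # decimal
    "m": Decimal("0.001"),                                # milli, typical for CPU
}


def parse_quantity(quantity) -> Decimal:
    """Convert a quantity such as '500m', '1', or '256Mi' to a Decimal."""
    text = str(quantity)
    for suffix, multiplier in SUFFIXES.items():
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * multiplier
    return Decimal(text)


def request_limit_problems(container: dict) -> list:
    """List CPU/memory settings that are missing or inconsistent for one container."""
    resources = container.get("resources", {})
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems = []
    for name in ("cpu", "memory"):
        if name not in requests and name not in limits:
            problems.append(f"{name}: no request or limit set")
        elif name in requests and name in limits:
            if parse_quantity(requests[name]) > parse_quantity(limits[name]):
                problems.append(f"{name}: request {requests[name]} exceeds limit {limits[name]}")
    return problems


# Hypothetical container: the CPU request (1 core) is larger than its limit (0.5 core).
container = {
    "name": "api",
    "resources": {
        "requests": {"cpu": "1", "memory": "512Mi"},
        "limits": {"cpu": "500m", "memory": "1Gi"},
    },
}

print(request_limit_problems(container))  # ['cpu: request 1 exceeds limit 500m']
```

Choosing the actual values still requires observing the workload, for example with kubectl top pods or a monitoring system.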

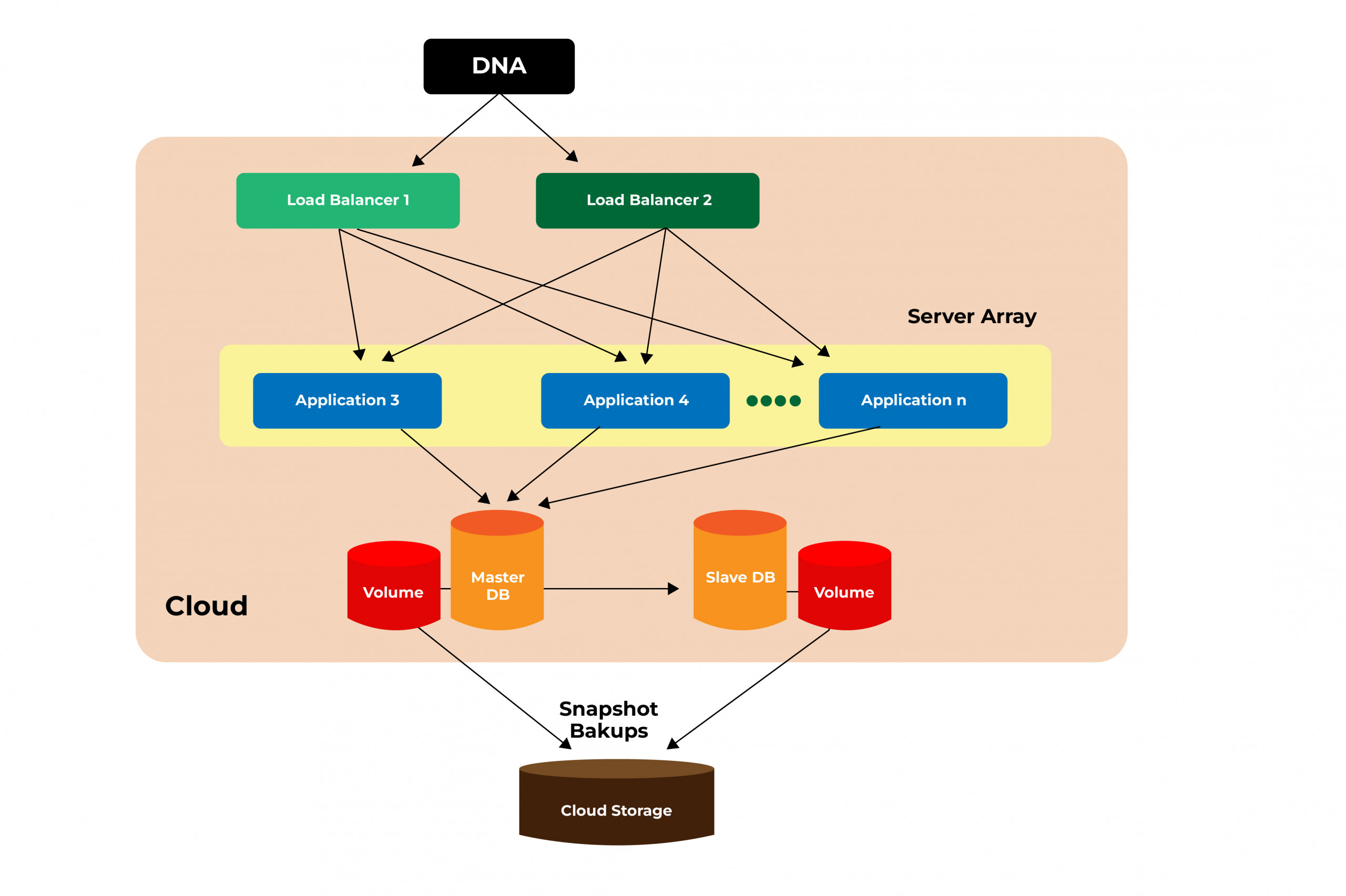

Error 5: Multiple load balancer services are consuming excessive resources.

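Reason: Each Service of type LoadBalancer typically provisions its own external load balancer from the cloud provider. Many such Services multiply infrastructure cost, public IP addresses, and management overhead, and can impact the performance of the deployment.

Solution: Consolidate load balancer services, for example by exposing several services through a single ingress controller, to reduce resource usage. For background, see the overview of load balancing.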

What Is Load Balancing? How Load Balancers Work
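One common consolidation pattern is a single Ingress that routes path prefixes to several ClusterIP Services, so only the ingress controller needs an external load balancer. The sketch below generates such a manifest as a dictionary using the networking.k8s.io/v1 Ingress schema; the host, service names, ports, and the "nginx" ingress class are hypothetical and depend on which controller the cluster runs.

```python
import json
from typing import Optional


def build_ingress(name: str, host: str, routes: list, ingress_class: Optional[str] = None) -> dict:
    """Build one Ingress that routes path prefixes to several backend Services.

    routes: list of (path_prefix, service_name, service_port) tuples.
    """
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, service, port in routes
    ]
    spec = {"rules": [{"host": host, "http": {"paths": paths}}]}
    if ingress_class:
        spec["ingressClassName"] = ingress_class
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": spec,
    }


# Hypothetical routes: two services that previously each had their own LoadBalancer.
ingress = build_ingress(
    "shop", "shop.example.com",
    [("/api", "api", 8080), ("/", "frontend", 80)],
    ingress_class="nginx",
)
print(json.dumps(ingress, indent=2))
```

kubectl apply -f accepts JSON as well as YAML, so the printed object can be saved to a file and applied; the backing Services can then be switched from type LoadBalancer to ClusterIP.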

Error 6: The DNS service is not correctly configured, resulting in issues with resolving domain names.

Reason: Kubernetes relies on DNS to discover and connect to services. If the DNS service is not configured correctly, it can lead to issues with service discovery and connectivity.

Solution: Verify that the DNS service is correctly configured by checking the kubelet configuration and the logs of the DNS pods (typically CoreDNS in the kube-system namespace). Ensure that the DNS service IP address is specified correctly in the kubelet configuration (the clusterDNS setting) and that the DNS pods are running. Additionally, check that the DNS service is reachable from within the cluster by running a DNS lookup, such as nslookup, from inside a pod.

Debugging DNS Resolution | Kubernetes
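Services receive DNS names of the form service.namespace.svc.cluster-domain, so a lookup test needs the right fully qualified name. The sketch below builds that name and resolves it with the standard library; it assumes the default cluster domain cluster.local (clusters can be configured differently) and only works when run from inside a pod, since cluster DNS names do not resolve from outside.

```python
import socket
from typing import Optional


def service_fqdn(service: str, namespace: str = "default", cluster_domain: str = "cluster.local") -> str:
    """Build the DNS name that cluster DNS assigns to a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolve(name: str) -> Optional[str]:
    """Return an IPv4 address for name, or None if the lookup fails."""
    try:
        return socket.gethostbyname(name)
    except OSError:
        # socket.gaierror (unknown name, unreachable resolver) is a subclass of OSError.
        return None
```

For a hypothetical Service "web" in namespace "shop", resolve(service_fqdn("web", "shop")) returning None inside a pod points to the DNS pods or the kubelet clusterDNS setting, while a successful lookup shifts attention to the Service itself.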

Error 7: Pods are not being scheduled on the desired nodes, resulting in resource imbalances and performance issues.

Reason: Kubernetes uses node scheduling to determine which nodes to deploy pods on. If node scheduling is not configured correctly, pods may not be scheduled on the desired nodes, resulting in resource imbalances and performance issues.

Solution: Configure node affinity rules (or simpler node selectors) so that pods are scheduled on the desired nodes. Node affinity expresses placement rules based on node labels; operators such as NotIn and DoesNotExist provide anti-affinity behavior. Taints and tolerations are a complementary mechanism that lets nodes repel pods that do not tolerate them. Together, these settings help place pods on the appropriate nodes to achieve optimal performance and resource utilization.

Assigning Pods to Nodes | Kubernetes
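To see why a pod lands (or does not land) on a node, it can help to evaluate the required node affinity terms against the node's labels, which kubectl get nodes --show-labels lists. The sketch below models the nodeSelectorTerms of requiredDuringSchedulingIgnoredDuringExecution in simplified form: it ignores matchFields and preferred rules, and the label keys disktype and gpu are hypothetical.

```python
def expression_matches(expr: dict, labels: dict) -> bool:
    """Evaluate one nodeSelectorRequirement against a node's labels."""
    key, operator = expr["key"], expr["operator"]
    values = expr.get("values", [])
    present = key in labels
    if operator == "In":
        return present and labels[key] in values
    if operator == "NotIn":
        return not present or labels[key] not in values
    if operator == "Exists":
        return present
    if operator == "DoesNotExist":
        return not present
    if operator in ("Gt", "Lt"):
        # Gt/Lt compare the label value and the single listed value as integers.
        try:
            node_value, bound = int(labels[key]), int(values[0])
        except (KeyError, IndexError, ValueError):
            return False
        return node_value > bound if operator == "Gt" else node_value < bound
    raise ValueError(f"unsupported operator: {operator}")


def node_matches(node_selector_terms: list, labels: dict) -> bool:
    """Terms are ORed; the expressions within one term are ANDed.
    A term with no matchExpressions matches nothing."""
    for term in node_selector_terms:
        expressions = term.get("matchExpressions", [])
        if expressions and all(expression_matches(e, labels) for e in expressions):
            return True
    return False


# Hypothetical rule: require SSD nodes and avoid nodes carrying a "gpu" label.
terms = [{
    "matchExpressions": [
        {"key": "disktype", "operator": "In", "values": ["ssd"]},
        {"key": "gpu", "operator": "DoesNotExist"},
    ]
}]
print(node_matches(terms, {"disktype": "ssd"}))                 # True
print(node_matches(terms, {"disktype": "ssd", "gpu": "true"}))  # False
```

If no node satisfies the required terms, the pod stays Pending, so a check like this is worth running whenever node labels or affinity rules change.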

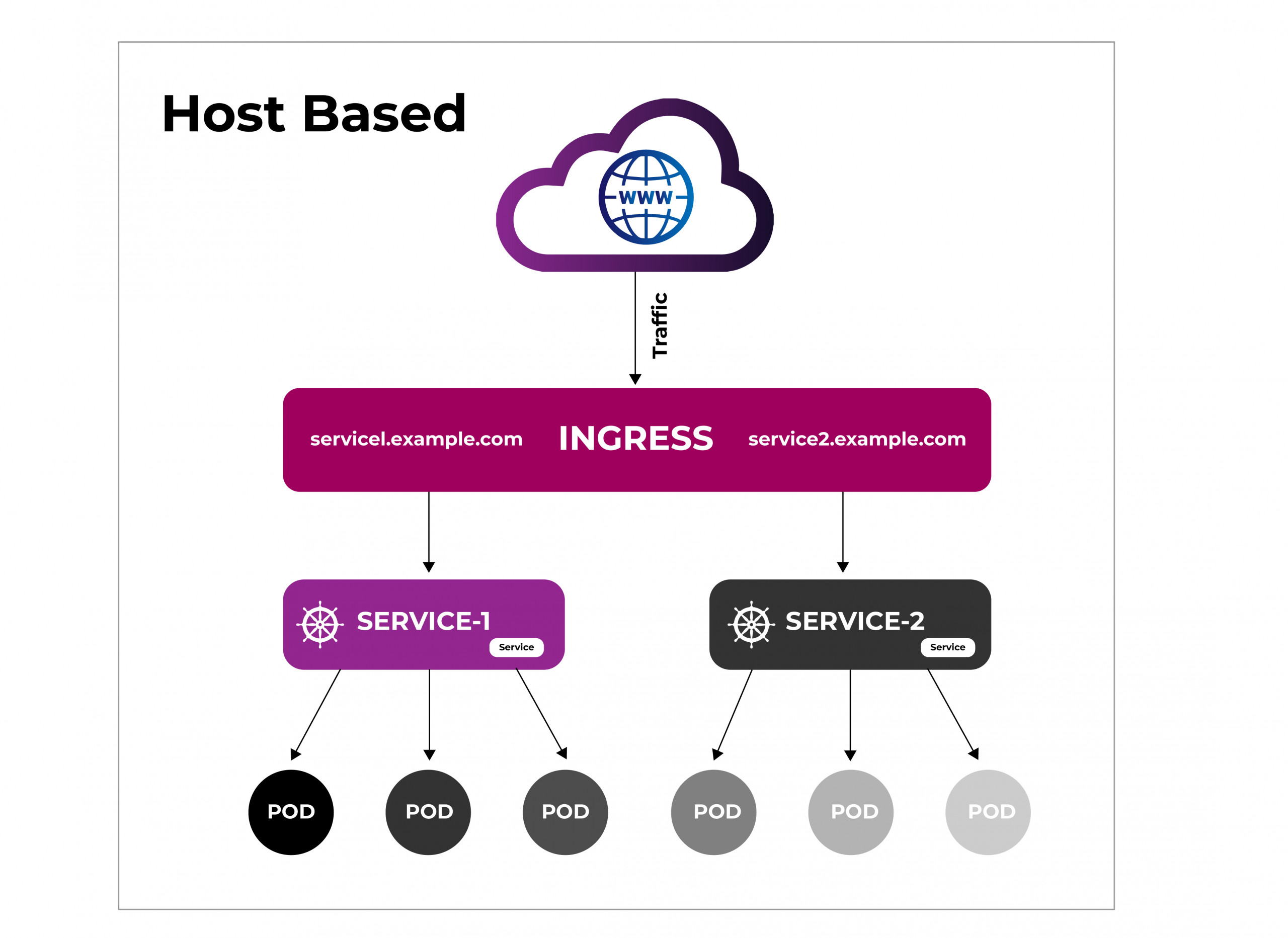

Error 8: The ingress controller is not configured correctly, resulting in issues with routing traffic to services.

Reason: Kubernetes uses ingress controllers to route external traffic to services within the cluster. If the ingress controller is not configured correctly, it can lead to issues with routing traffic to services.

Solution: Check the configuration of the ingress controller to ensure that it is correctly configured. Verify that the ingress controller is deployed and running correctly, and that the necessary ingress resources are defined in the Kubernetes manifest. Additionally, check that the ingress rules are defined correctly and that the ingress controller is reachable from outside the cluster.

Troubleshooting Common Issues | NGINX Ingress Controller
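A frequent source of incorrect ingress rules is a misunderstanding of path matching. The sketch below approximates the Ingress specification's rules: Exact requires a literal match, Prefix compares whole path elements (so /api matches /api/v1 but not /apiary), the longest matching path wins, and Exact beats Prefix on a tie. ImplementationSpecific paths depend on the controller and are not modeled, and individual controllers may differ in details; the service names are hypothetical.

```python
def _segments(path: str) -> list:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def path_matches(rule_path: str, path_type: str, request_path: str) -> bool:
    if path_type == "Exact":
        # Exact matching is literal and case-sensitive.
        return request_path == rule_path
    if path_type == "Prefix":
        # Prefix matching compares whole path elements, ignoring a trailing slash.
        prefix = _segments(rule_path)
        return _segments(request_path)[: len(prefix)] == prefix
    # ImplementationSpecific matching is left to the ingress controller.
    raise ValueError(f"cannot evaluate pathType {path_type!r}")


def pick_backend(paths: list, request_path: str):
    """Return the backend service chosen for request_path, or None if nothing matches."""
    candidates = [p for p in paths if path_matches(p["path"], p["pathType"], request_path)]
    if not candidates:
        return None
    # Longest path first; on equal length, Exact (True) outranks Prefix (False).
    best = max(candidates, key=lambda p: (len(p["path"].rstrip("/")), p["pathType"] == "Exact"))
    return best["backend"]["service"]["name"]


def rule(path: str, path_type: str, service: str) -> dict:
    return {"path": path, "pathType": path_type, "backend": {"service": {"name": service}}}


# Hypothetical rules for one host.
paths = [rule("/", "Prefix", "frontend"), rule("/api", "Prefix", "api")]
print(pick_backend(paths, "/api/v1/items"))  # api
print(pick_backend(paths, "/apiary"))        # frontend
```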

Error 9: Authentication issues with the container registry, resulting in issues with pulling container images.

Reason: Kubernetes relies on container registries to store and distribute container images. If the container registry authentication credentials are not configured correctly, it can lead to issues with pulling container images.

Solution: Check the container registry credentials and ensure that they are correctly configured in Kubernetes, typically as an image pull secret referenced by the pod spec or its service account. Additionally, verify that the image name and tag exist in the registry and are accessible from the cluster nodes. If authentication issues persist, consider using a registry that integrates with your cluster's authentication mechanism, such as your cloud provider's managed registry.

Troubleshooting | Container Registry documentation | Google Cloud
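Registry credentials are usually stored in a Secret of type kubernetes.io/dockerconfigjson and referenced from the pod spec through imagePullSecrets. The sketch below builds such a Secret by hand to show its structure; kubectl create secret docker-registry with --docker-server, --docker-username, and --docker-password produces the equivalent object. The registry, secret name, and credentials are placeholders.

```python
import base64
import json


def b64(data: bytes) -> str:
    return base64.b64encode(data).decode("ascii")


def image_pull_secret(name: str, registry: str, username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret for one registry."""
    docker_config = {
        "auths": {
            registry: {
                "username": username,
                "password": password,
                "auth": b64(f"{username}:{password}".encode()),
            }
        }
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": b64(json.dumps(docker_config).encode())},
    }


# Placeholder values; load real credentials from a secret store, never from source code.
secret = image_pull_secret("regcred", "registry.example.com", "ci-bot", "change-me")

# The pod spec then references the Secret by name.
pod_spec = {
    "imagePullSecrets": [{"name": secret["metadata"]["name"]}],
    "containers": [{"name": "app", "image": "registry.example.com/team/app:1.4.2"}],
}
print(json.dumps(secret, indent=2))
```

When pulls still fail, decoding the .dockerconfigjson field of the Secret in the cluster and comparing the registry hostname with the image reference often reveals the mismatch.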

Error 10: Insufficient resources are available on the cluster, resulting in issues with deploying and scaling applications.

Reason: Kubernetes requires sufficient resources to deploy and scale applications. If there are insufficient resources available on the cluster, it can lead to issues with deploying and scaling applications.

Solution: Check the resource availability and constraints on the cluster. Ensure that there are sufficient compute resources, storage resources, and network resources available to support the application workload. If resource constraints persist, consider scaling up the cluster or optimizing resource utilization through resource quotas, resource limits, or horizontal pod autoscaling.

Autoscaling in Kubernetes
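The Horizontal Pod Autoscaler's core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) of 1.0. The sketch below reproduces that rule with min/max clamping; it ignores stabilization windows, scaling policies, and pods that are not yet ready, and the replica bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """desired = ceil(current_replicas * current_metric / target_metric),
    unchanged when the ratio is within tolerance of 1.0, then clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical: 4 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
```

The autoscaler only adds pods; if the cluster lacks capacity for them, the new replicas remain Pending until more nodes are available, which is why pod autoscaling is usually paired with node-level scaling.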

Error 11: An error occurred during the deployment process, and the new version of the application is not functional.

Reason: Errors can occur during the deployment process, even with proper configuration and testing. In some cases, these errors may not be immediately apparent and may result in the new version of the application not functioning correctly.

Solution: Roll back to the previous version of the deployment and troubleshoot the issue. Rolling back keeps the application functional while you investigate and resolve the error. Additionally, use monitoring and logging tools to identify the root cause, and incorporate the lessons learned into future deployments.

Monitoring, Logging, and Debugging | Kubernetes
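For a Deployment, the rollback itself is typically done with kubectl rollout undo, after inspecting the revision history and before waiting for the rollout to settle. The sketch below wraps those three real kubectl subcommands and prints them by default instead of executing them; the Deployment and namespace names are hypothetical, and the dry_run switch is an illustrative safety choice.

```python
import subprocess
from typing import List, Optional


def rollout(deployment: str, namespace: str, action: str, *extra: str) -> List[str]:
    """Build a 'kubectl rollout <action>' command for one Deployment."""
    return ["kubectl", "rollout", action, f"deployment/{deployment}", "-n", namespace, *extra]


def rollback_plan(deployment: str, namespace: str = "default",
                  to_revision: Optional[int] = None) -> List[List[str]]:
    """Inspect history, undo the rollout, then wait until the rollback completes."""
    undo_flags = [f"--to-revision={to_revision}"] if to_revision is not None else []
    return [
        rollout(deployment, namespace, "history"),
        rollout(deployment, namespace, "undo", *undo_flags),
        rollout(deployment, namespace, "status"),
    ]


def run(plan: List[List[str]], dry_run: bool = True) -> None:
    for cmd in plan:
        print("$", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step


# Hypothetical Deployment "web" in namespace "shop"; prints the commands only.
run(rollback_plan("web", "shop"))
```

Without --to-revision, kubectl rollout undo returns to the immediately previous revision; passing a revision number from the history output targets an older one.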

Error 12: When a new pod is deployed, Kubernetes may be unable to find a node with enough unreserved resources for it. This can leave pods stuck in the Pending state or, when nodes run low on resources, lead to pod evictions.

Reason: The resource requests of the pod may exceed what remains available on every node. This can occur due to high resource requests or usage by other pods, insufficient capacity on the nodes, or incorrect resource requests and limits set for the pods.

Solution: There are several solutions to address insufficient node resources in Kubernetes:

- Increase the resources available on the nodes by adding more nodes to the cluster or upgrading the existing nodes.

- Optimize the resource usage of existing pods by reducing resource requests and limits or using resource quotas to limit resource usage.

- Use a cluster autoscaler to automatically add or remove nodes based on pods that cannot be scheduled and on node utilization.

- Use node selectors or affinity rules to schedule pods on nodes with sufficient resources.

Node-pressure Eviction | Kubernetes
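The scheduler's fit decision is based on resource requests, not actual usage: a pod fits only if the requests already reserved on a node plus the new pod's requests stay within the node's allocatable capacity. The sketch below is a simplified version of that check; it ignores pod overhead, init containers, and pod-count limits, and uses plain integers (CPU in millicores, memory in MiB) for hypothetical values. The real numbers for a node appear under "Allocated resources" in kubectl describe node.

```python
def shortfall(allocatable: dict, existing_requests: list, new_requests: dict) -> dict:
    """Return how far each requested resource would exceed the node's allocatable
    capacity if the new pod were placed there; an empty dict means it fits."""
    over = {}
    for resource, amount in new_requests.items():
        if amount <= 0:
            continue  # resources the pod does not request are not checked
        reserved = sum(pod.get(resource, 0) for pod in existing_requests)
        capacity = allocatable.get(resource, 0)
        if reserved + amount > capacity:
            over[resource] = reserved + amount - capacity
    return over


# Hypothetical node with 4 CPUs and 8 GiB allocatable, already hosting two pods.
node_allocatable = {"cpu": 4000, "memory": 8192}
pods_on_node = [{"cpu": 1500, "memory": 2048}, {"cpu": 2000, "memory": 4096}]
new_pod = {"cpu": 1000, "memory": 1024}

print(shortfall(node_allocatable, pods_on_node, new_pod))  # {'cpu': 500}
```

In this example, lowering the new pod's CPU request to 500m, freeing requests elsewhere, or adding a node would each let the pod schedule.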

Error 13: Inadequate Cluster Security

Reason: Inadequate cluster security can lead to unauthorized access to sensitive data and malicious attacks on the cluster. Common causes of inadequate security include a lack of authentication and authorization mechanisms, weak or default passwords, and misconfigured network policies.

Solution: To address inadequate cluster security, DevOps teams should implement best practices for securing the Kubernetes cluster. This includes:

- Implementing strong authentication and authorization mechanisms, such as role-based access control (RBAC), to restrict access to sensitive resources.

- Enforcing strong password policies and disabling default accounts and passwords.

- Implementing network policies to restrict traffic between pods and nodes, and between pods in different namespaces.

- Regularly updating and patching the Kubernetes software to address known security vulnerabilities.

- Enabling logging and monitoring tools to detect and respond to security incidents.

- Performing regular security audits and vulnerability assessments to identify and address potential security risks.

By implementing these best practices, DevOps teams can significantly reduce the attack surface of their Kubernetes clusters and better protect them from potential security threats.

Securing a Cluster | Kubernetes
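For the network policy practice, a common starting point is a namespace-wide default-deny ingress policy plus narrow allow rules. The sketch below generates both as networking.k8s.io/v1 NetworkPolicy objects; the namespace, labels, policy name, and port are hypothetical, and the policies only take effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress_from(namespace: str, name: str, target: dict, source: dict, port: int) -> dict:
    """Allow TCP traffic on one port to pods labeled `target`
    from pods labeled `source` in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": source}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical namespace and labels: only frontend pods may reach the API on port 8080.
policies = [
    default_deny_ingress("shop"),
    allow_ingress_from("shop", "allow-frontend-to-api", {"app": "api"}, {"app": "frontend"}, 8080),
]
print(json.dumps(policies, indent=2))
```

Because network policies are additive allow-lists, the default-deny policy blocks everything not explicitly permitted, and each allow rule documents one intended traffic path.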

Challenges

Despite the numerous benefits of using Kubernetes, there are also some challenges that organizations may face while deploying and managing their applications on this platform. Some of the common challenges are:

Complexity: Kubernetes is a complex system with a steep learning curve. It requires a thorough understanding of the platform’s architecture, concepts, and components.

Resource Management: Kubernetes provides features for resource management, such as resource requests and limits, but it can be challenging to get these values right to ensure optimal performance and resource utilization.

Scalability: While Kubernetes is designed to be highly scalable, achieving and maintaining scalability requires careful planning and execution.

Network Configuration: Kubernetes provides a wide range of networking features, including DNS, load balancing, and service discovery, but configuring these features can be complex and challenging.

Security: Securing a Kubernetes deployment can be challenging, as there are many attack vectors that need to be considered, such as the API server and the container runtime.

Upgrades: Kubernetes upgrades can be challenging, especially when upgrading across multiple versions. Organizations need to plan and test upgrades carefully to avoid service disruptions.

Overcoming these challenges requires careful planning, execution, and ongoing management. It is essential to have a team with a deep understanding of Kubernetes and its components, as well as experience in deploying and managing applications on this platform. Additionally, using tools and services that provide automation and simplification can help organizations streamline their Kubernetes operations and ensure that their applications run smoothly.
